From Assembly Lines to AI: The Revolution of Manufacturing Over the Last 100 Years

The past century has witnessed the most profound and rapid transformation in human production since the dawn of the Industrial Revolution. Manufacturing, the engine of the global economy, has evolved from a process reliant on manual labor and mechanical machines to one driven by digital data, artificial intelligence, and global interconnectedness. The journey from the roaring 1920s to the present day is a story of relentless innovation, fundamentally altering not only what we produce but how we work, live, and relate to the world. This evolution can be understood through three pivotal shifts: the age of mass production, the age of automation and globalization, and the current dawn of the smart, digital factory.

The First Paradigm: The Age of Mass Production and the Assembly Line (1920s-1960s)

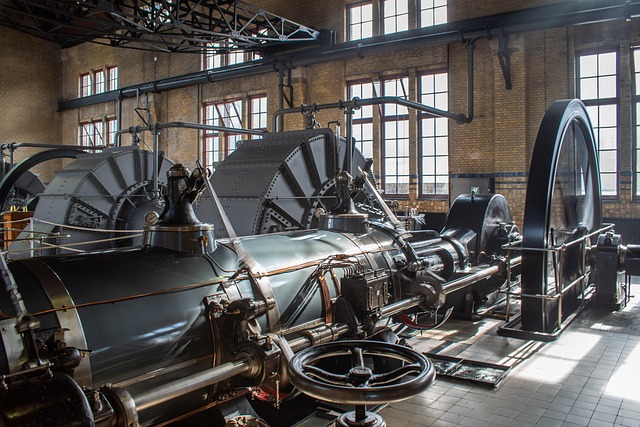

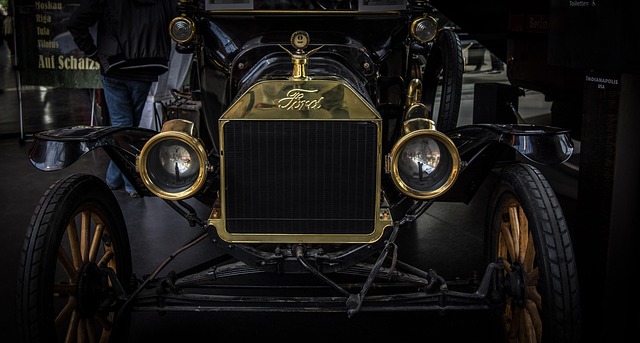

The manufacturing world of the early 20th century was poised for change. While the Second Industrial Revolution had introduced electricity and steel, production was still often batch-based and craft-oriented. This changed decisively with the perfection and proliferation of the moving assembly line, pioneered by Henry Ford. This was not merely an invention but a revolutionary system.

Ford’s innovation was to bring the work to the worker, drastically reducing the time and effort required to assemble a complex product like the automobile. The car, once a luxury item, became affordable for the masses in the form of the Model T. This era cemented the principles of mass production: standardization, specialization, and economies of scale. Factories became sprawling complexes dedicated to producing a single, uniform product at unprecedented volumes. The societal impact was immense, creating a booming middle class with stable, well-paying factory jobs and fueling consumerism by making goods like appliances and cars widely accessible.

This system, however, had its limitations. It was incredibly rigid. A production line designed for the Model T could produce nothing else, making changeovers slow and costly. The work was often monotonous and dehumanizing for the labor force. Furthermore, quality control was a reactive process—inspectors identified defects at the end of the line, leading to significant waste. This model, while powerful, was ripe for disruption by more flexible and efficient methodologies.

The Second Paradigm: The Age of Automation, Quality, and Globalization (1970s-1990s)

The post-war era brought new ideas and technologies that began to challenge the Fordist model. The rise of Japan as a manufacturing powerhouse introduced Western companies to new philosophies, most notably the Toyota Production System (TPS), which became the basis for Lean Manufacturing.

Where mass production focused on volume, Lean focused on value and the elimination of ‘muda’ (waste)—waste of time, materials, and effort. Concepts like ‘Just-In-Time’ (JIT) inventory, which minimized costly stockpiles of parts, and *Jidoka* (automation with a human touch), which empowered any worker to stop the line to fix a problem, revolutionized efficiency. This shifted quality control from a reactive to a proactive and integrated process, vastly improving product reliability.
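The difference between end-of-line inspection and a Jidoka-style line stop can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a description of Toyota's actual practice: the `Unit` class, the `make_batch` helper, and the rule that a fault persists once it appears (as with a misaligned tool) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    serial: int
    defective: bool = False


def make_batch(size, fault_starts_at):
    """Hypothetical batch: every unit from `fault_starts_at` onward is defective."""
    return [Unit(serial=i, defective=(i >= fault_starts_at))
            for i in range(1, size + 1)]


def end_of_line_inspection(units):
    """Reactive model: the whole batch is built, then defects are found."""
    scrapped = [u.serial for u in units if u.defective]
    return {"built": len(units), "scrapped": scrapped}


def stop_the_line(units):
    """Jidoka-style model: the first detected defect halts production."""
    built = 0
    for u in units:
        built += 1
        if u.defective:
            # Stop immediately so the cause can be fixed before more units are made.
            return {"built": built, "stopped_at": u.serial}
    return {"built": built, "stopped_at": None}


batch = make_batch(size=10, fault_starts_at=4)
print(end_of_line_inspection(batch))  # seven defective units reach the end of the line
print(stop_the_line(batch))           # production halts at the first defective unit
```

In this toy batch the reactive model scraps seven units, while the line stop exposes only one, which is the sense in which quality control becomes proactive.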

Concurrently, technology began to automate the shop floor. The Programmable Logic Controller (PLC), introduced at the end of the 1960s and widely adopted during the 1970s, provided a rugged, computer-based way to control machinery and assembly lines, allowing for greater flexibility than hard-wired relay systems. This was followed by the widespread adoption of Computer Numerical Control (CNC) machines, which could be programmed to perform precise machining tasks with minimal human intervention, enabling the high-accuracy production of complex parts.

This period also saw the seeds of globalization sown. Advances in containerized shipping and international logistics, combined with rising labor costs in the West, encouraged companies to seek manufacturing capacity in developing nations. This began the shift from national industrial ecosystems to complex, interconnected global supply chains.

The Third Paradigm: The Digital Revolution and the Rise of Smart Manufacturing (2000s-Present)

The turn of the millennium marked the beginning of the most radical transformation, fueled by the digital revolution. This era is defined by the integration of the physical and digital worlds, a convergence often called Industry 4.0.

The foundational element of this new age is data. Sensors on machines, products, and even workers generate a constant stream of real-time information. This Internet of Things (IoT) connectivity allows for an unprecedented level of visibility and control. Key technologies defining this era include:

Additive Manufacturing (3D Printing): Moving entirely away from subtractive methods (carving a block of material), 3D printing builds objects layer by layer from digital models. This allows for incredible design freedom, rapid prototyping, and cost-effective customization, upending traditional tooling and inventory needs.

Advanced Robotics and AI: Modern robots, often collaborative (cobots) and designed to work safely alongside humans, are equipped with AI and machine vision. They can learn tasks, adapt to variability, and perform complex quality checks, moving beyond the repetitive, caged roles of the past.

The Digital Twin: Companies can now create a virtual, digital replica of a physical asset, process, or system. This model can be used to simulate, predict, and optimize performance before any change is made in the real world, saving immense time and resources.

These technologies coalesce into the Smart Factory, a fully connected and flexible facility that can self-optimize performance across a network. It can adapt in real time to new demands, anticipate failures through predictive maintenance, and run a mass customization model where products are tailored to individual customers without sacrificing efficiency.
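To make the idea of predictive maintenance concrete, here is a minimal sketch of one simple approach: watching a rolling average of sensor readings and raising an alert when it drifts past a limit. The function name `first_alert`, the window size, the limit, and the vibration values are all illustrative assumptions; real systems typically rely on far richer models and data.

```python
from collections import deque
from statistics import mean


def first_alert(readings, window=3, limit=5.0):
    """Return the index where the rolling mean first exceeds `limit`, else None."""
    recent = deque(maxlen=window)  # keeps only the latest `window` readings
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and mean(recent) > limit:
            return i
    return None


# Hypothetical vibration readings (mm/s) from a single machine sensor.
vibration_mm_s = [2.0, 2.1, 2.0, 2.3, 4.0, 5.5, 6.5, 7.0]
print(first_alert(vibration_mm_s))
```

Averaging over a window rather than reacting to a single reading is a deliberate choice here: it trades a slightly later alert for fewer false alarms from one-off spikes.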

The Human and Societal Impact

The human role in manufacturing has evolved in tandem. The manual laborer of the 1920s has given way to the knowledge worker of the 2020s. Today’s factory floor requires skills in data analysis, robotics programming, and systems management. This shift has created a significant skills gap, challenging educational systems and companies to reskill the workforce for a new era.

Furthermore, the hyper-globalized supply chain model, while efficient, has revealed vulnerabilities, as seen during the COVID-19 pandemic and trade disputes. This is prompting a strategic rethink, with many companies exploring reshoring or near-shoring and investing in supply chain resilience through greater localization and digital tracking.

Conclusion: A Century of Continuous Revolution

From the deafening roar of Henry Ford’s first moving line to the near-silent hum of a 3D printer creating a custom medical implant, manufacturing has undergone a century of breathtaking change. The driving force has shifted from pure mechanical innovation to digital intelligence, from centralized mass production to distributed, personalized creation. The factory has evolved from a place of brute force to a network of intelligent systems. As we look to the future, driven by AI and sustainability imperatives, one constant remains: manufacturing will continue to be a primary shaper of our economic and social reality, relentlessly reinventing itself for the next hundred years.